Abstract

Skin images from real-world clinical practice are often limited, resulting in a shortage of training data for deep-learning models. While many studies have explored skin image synthesis, existing methods often generate low-quality images and lack control over the lesion's location and type.

To address these limitations, we present LF-VAR, a model that uses quantified lesion measurement scores and lesion type labels to guide clinically relevant, controllable synthesis of skin images. Given a language prompt, it synthesizes skin images with the specified lesion characteristics.

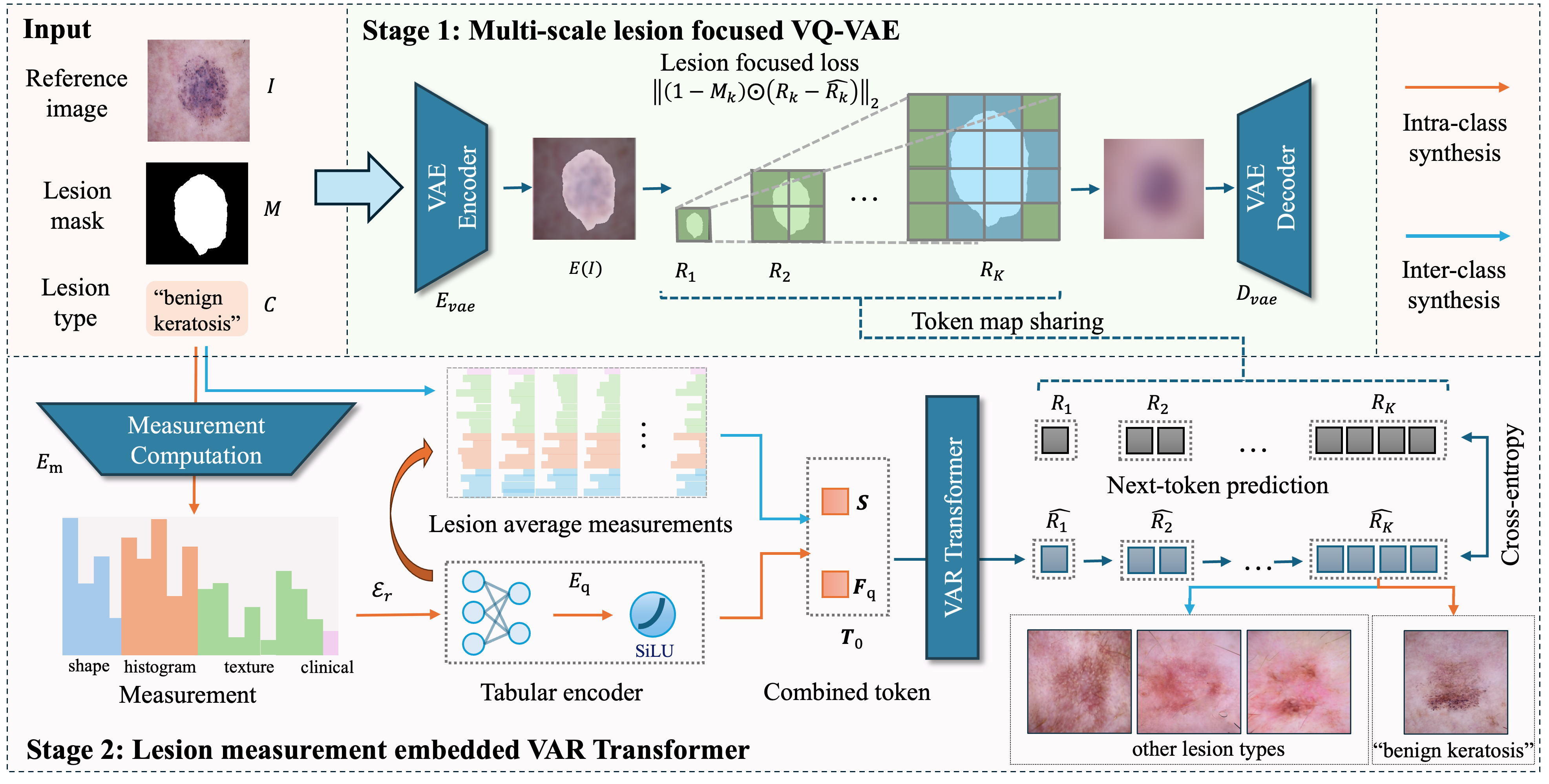

We train a multiscale, lesion-focused Vector Quantised Variational Auto-Encoder (VQVAE) to encode images into discrete latent representations for structured tokenization. A Visual AutoRegressive (VAR) Transformer, trained on these tokenized representations, then performs image synthesis. Lesion measurement scores extracted from the lesion region and lesion type labels are integrated as conditional embeddings to improve synthesis fidelity.
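The sketch below illustrates the idea of conditioning on both lesion type and lesion measurements. It builds a condition vector from two parts: a one-hot lesion type over the seven classes in the results table, and three simple scores derived from a binary lesion mask. The scores (area fraction and normalized centroid) and all function names are hypothetical stand-ins for the paper's lesion measurement (radiomics) features. A trained model would pass such inputs through learned embedding layers, which are omitted here.

```python
from typing import List

# Lesion classes as listed in the results table.
LESION_TYPES = ["AKIEC", "BCC", "BKL", "DF", "MEL", "NV", "VASC"]


def one_hot(label: str) -> List[float]:
    """Encode a lesion type label as a one-hot vector over LESION_TYPES."""
    if label not in LESION_TYPES:
        raise ValueError(f"unknown lesion type: {label}")
    return [1.0 if t == label else 0.0 for t in LESION_TYPES]


def mask_measurements(mask: List[List[int]]) -> List[float]:
    """Hypothetical lesion scores from a binary mask:
    area fraction and centroid (row, col), each normalized to [0, 1]."""
    h, w = len(mask), len(mask[0])
    pts = [(i, j) for i in range(h) for j in range(w) if mask[i][j]]
    if not pts:  # empty mask: no lesion pixels
        return [0.0, 0.0, 0.0]
    area = len(pts) / (h * w)
    cy = sum(i for i, _ in pts) / len(pts) / max(h - 1, 1)
    cx = sum(j for _, j in pts) / len(pts) / max(w - 1, 1)
    return [area, cy, cx]


def condition_vector(label: str, mask: List[List[int]]) -> List[float]:
    """Concatenate the lesion type code with the mask-derived scores."""
    return one_hot(label) + mask_measurements(mask)
```

Consider a 4 × 4 mask whose lesion fills the top-left 2 × 2 block. For that mask, `condition_vector("MEL", mask)` returns 10 values. The first seven are the one-hot code for MEL, followed by an area fraction of 0.25 and a centroid near the top-left corner.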

Our method achieves the best overall FID score (0.74, averaged across seven lesion types), a 6.3% improvement over the previous state-of-the-art (SOTA).
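The 6.3% figure can be checked as a relative reduction in average FID. This assumes that the previous SOTA refers to the VAR baseline in the results table below, which has an average FID of 0.79:

$$
\frac{\mathrm{FID}_{\text{VAR}} - \mathrm{FID}_{\text{LF-VAR}}}{\mathrm{FID}_{\text{VAR}}} = \frac{0.79 - 0.74}{0.79} = \frac{0.05}{0.79} \approx 0.063 = 6.3\%
$$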

Method Overview

Figure 1: Overall architecture of the LF-VAR model. The model consists of a lesion-focused VQVAE encoder-decoder and a conditional VAR transformer for controllable skin image synthesis.

Lesion-Focused VQVAE

Multiscale encoder-decoder architecture that captures lesion-specific features and generates discrete latent representations
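Below is a minimal sketch of multiscale (coarse-to-fine) residual quantization, in the spirit of the VAR tokenizer. It rests on several simplifying assumptions: scalar features on a square grid, a fixed scalar codebook, average pooling for downsampling and nearest-neighbour upsampling. The actual lesion-focused VQVAE uses learned vector codebooks and learned layers. `multiscale_quantize` and its helpers are hypothetical names used only for illustration.

```python
from typing import List, Tuple

Grid = List[List[float]]


def nearest_code(value: float, codebook: List[float]) -> int:
    """Index of the codebook entry closest to `value` (first one on ties)."""
    return min(range(len(codebook)), key=lambda k: (codebook[k] - value) ** 2)


def avg_pool(grid: Grid, s: int) -> Grid:
    """Average-pool an h x h grid down to s x s (h must be divisible by s)."""
    f = len(grid) // s
    return [
        [
            sum(grid[i * f + di][j * f + dj] for di in range(f) for dj in range(f)) / (f * f)
            for j in range(s)
        ]
        for i in range(s)
    ]


def upsample(grid: Grid, h: int) -> Grid:
    """Nearest-neighbour upsample an s x s grid to h x h."""
    f = h // len(grid)
    return [[grid[i // f][j // f] for j in range(h)] for i in range(h)]


def multiscale_quantize(
    feat: Grid, codebook: List[float], scales: List[int]
) -> Tuple[List[List[List[int]]], Grid]:
    """Tokenize `feat` coarse-to-fine: each scale quantizes the residual left
    by all coarser scales. Returns one token map per scale and the
    reconstruction obtained by summing the upsampled code maps."""
    h = len(feat)
    residual = [row[:] for row in feat]
    recon = [[0.0] * h for _ in range(h)]
    tokens = []
    for s in scales:
        # Quantize the pooled residual at this scale to codebook indices.
        idx = [[nearest_code(v, codebook) for v in row] for row in avg_pool(residual, s)]
        tokens.append(idx)
        # Map indices back to code values at full resolution.
        up = upsample([[codebook[k] for k in row] for row in idx], h)
        for i in range(h):
            for j in range(h):
                recon[i][j] += up[i][j]
                residual[i][j] -= up[i][j]
    return tokens, recon
```

In this sketch, the 1 × 1 token map captures the global mean, and finer maps only encode what coarser ones missed. This coarse-to-fine token hierarchy is what the autoregressive transformer later predicts, one scale at a time.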

Conditional VAR Transformer

Autoregressive transformer trained on the tokenized representations and conditioned on lesion type and radiomics features, supplied as conditional embeddings
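The original VAR formulation trains next-scale prediction with a block-wise causal attention mask. Under that mask, tokens within one scale can see each other, so a whole token map is predicted in parallel, while no token can see a finer scale. The sketch below builds such a mask, assuming that LF-VAR keeps this design. It also assumes, hypothetically, that the lesion condition occupies `n_cond` leading positions and that scale `s` contributes an s × s token map. `block_causal_mask` is an illustrative name, not part of the LF-VAR code.

```python
from typing import List


def block_causal_mask(scales: List[int], n_cond: int = 1) -> List[List[bool]]:
    """Return mask[q][k], True when position q may attend to position k.

    Positions are laid out as
    [condition tokens | scale-1 tokens | scale-2 tokens | ...],
    where scale s contributes s * s tokens.
    """
    # Block index of each position: 0 for the condition, i + 1 for the i-th scale.
    blocks = [0] * n_cond
    for i, s in enumerate(scales):
        blocks.extend([i + 1] * (s * s))
    n = len(blocks)
    # A query sees every key in its own block or in any earlier (coarser) block.
    return [[blocks[k] <= blocks[q] for k in range(n)] for q in range(n)]
```

For scales `[1, 2]`, the mask covers 1 + 1 + 4 = 6 positions. The condition token sees only itself, the 1 × 1 token sees the condition and itself, and every 2 × 2 token sees all six positions.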

Controlled Synthesis

Language-guided generation with precise control over lesion characteristics and spatial location

Results & Performance

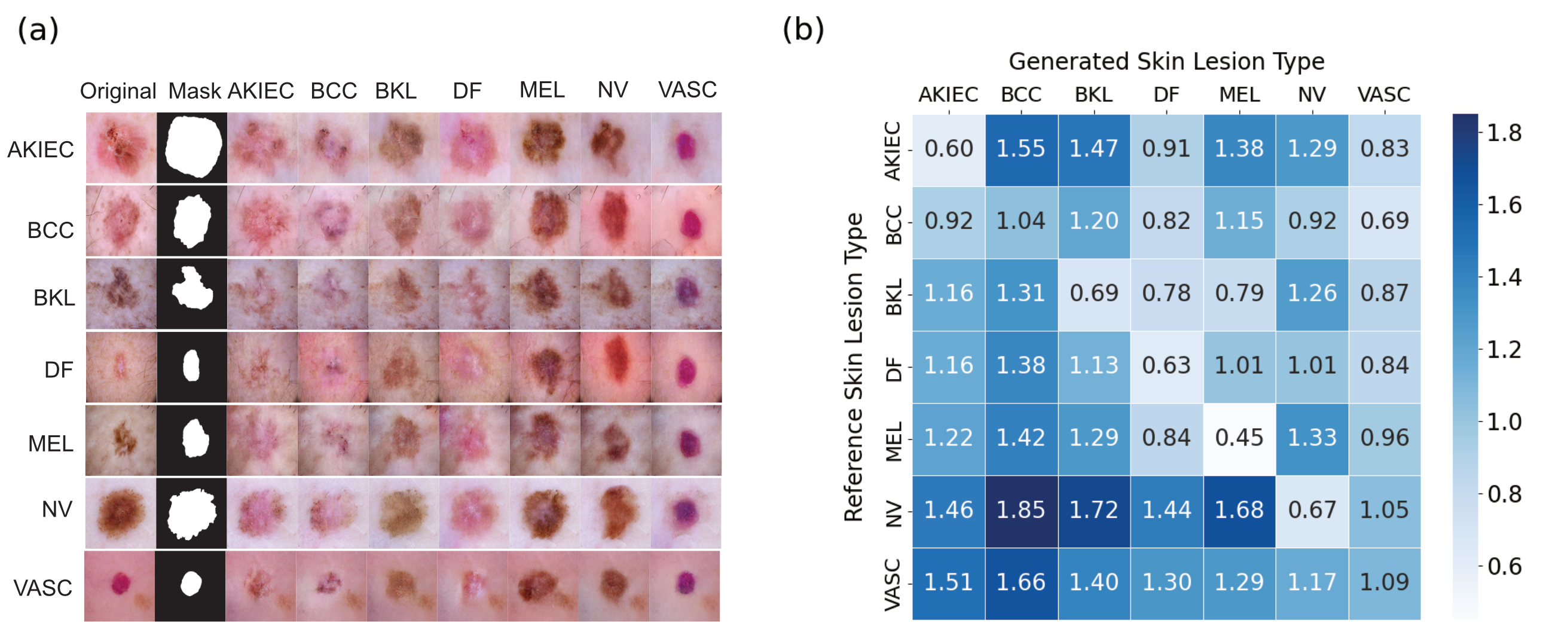

Figure 2: Inter-class synthesis results and FID matrix for seven disease classes, demonstrating the model's ability to generate high-quality skin images across different lesion types.

Quantitative Results Comparison

| Method | Prompt | Metric | AKIEC | BCC | BKL | DF | MEL | NV | VASC | Average |
|---|---|---|---|---|---|---|---|---|---|---|
| MAGE | ✗ | IS ↑ | 3.08 ± 0.13 | 3.18 ± 0.12 | 3.01 ± 0.15 | 2.96 ± 0.21 | 2.91 ± 0.16 | 2.98 ± 0.14 | 3.08 ± 0.11 | 3.03 ± 0.15 |
| | | FID ↓ | 12.92 | 11.86 | 9.84 | 12.18 | 8.49 | 14.97 | 16.34 | 12.37 |
| MAGE Adapter | ✗ | IS ↑ | 2.49 ± 0.05 | 1.79 ± 0.09 | 1.98 ± 0.05 | 2.84 ± 0.14 | 2.38 ± 0.09 | 2.66 ± 0.10 | 2.67 ± 0.16 | 2.40 ± 0.10 |
| | | FID ↓ | 2.33 | 7.99 | 2.65 | 3.09 | 5.82 | 3.24 | 3.28 | 4.06 |
| Derm T2IM | Text | IS ↑ | <u>3.74 ± 0.19</u> | <u>4.23 ± 0.17</u> | **3.94 ± 0.39** | **5.03 ± 0.31** | <u>3.59 ± 0.29</u> | **4.74 ± 0.30** | **4.69 ± 0.46** | **4.28 ± 0.30** |
| | | FID ↓ | 6.52 | 6.02 | 3.32 | 5.31 | 4.74 | 6.26 | 5.69 | 5.41 |
| Diffusion | Mask | IS ↑ | **3.90 ± 0.20** | **4.88 ± 0.25** | 3.12 ± 0.12 | <u>4.04 ± 0.18</u> | **4.22 ± 0.30** | <u>3.96 ± 0.25</u> | 3.10 ± 0.09 | <u>3.89 ± 0.20</u> |
| | | FID ↓ | 1.22 | <u>1.47</u> | 0.78 | 1.15 | 0.90 | 1.57 | **0.64** | 1.11 |
| VAR | Text-Mask | IS ↑ | 3.09 ± 0.14 | 2.57 ± 0.12 | <u>3.27 ± 0.19</u> | 2.58 ± 0.08 | 2.76 ± 0.11 | 2.37 ± 0.10 | 2.48 ± 0.15 | 2.73 ± 0.13 |
| | | FID ↓ | <u>0.74</u> | 1.58 | **0.44** | <u>0.76</u> | **0.44** | **0.56** | <u>1.04</u> | <u>0.79</u> |
| Ours (LF-VAR) | Text-Mask | IS ↑ | 3.27 ± 0.13 | 2.41 ± 0.09 | 3.26 ± 0.12 | 2.34 ± 0.11 | 2.84 ± 0.08 | 2.63 ± 0.13 | <u>3.92 ± 0.08</u> | 2.95 ± 0.10 |
| | | FID ↓ | **0.60** | **1.04** | <u>0.69</u> | **0.63** | <u>0.45</u> | **0.56** | 1.09 | **0.74** |

Legend: Bold = Best performance, Underline = Second best performance

Metrics: IS (Inception Score) ↑ = Higher is better, FID (Fréchet Inception Distance) ↓ = Lower is better

Prompt Types: ✗ = No prompt, Text = Text prompt, Mask = Mask prompt, Text-Mask = Text + Mask prompt
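For reference, the two metrics listed above have the following standard definitions. For FID, $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ are the mean and covariance of Inception-v3 features of real and generated images, respectively. For IS, $p(y \mid x)$ is the Inception classifier's label distribution for a generated image $x$, and $p(y)$ is its marginal over generated images. The exact feature layer and sample counts used for the table are not stated on this page.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$

$$
\mathrm{IS} = \exp\left(\mathbb{E}_{x \sim p_g}\, D_{\mathrm{KL}}\left(p(y \mid x) \,\Vert\, p(y)\right)\right)
$$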

Code & Resources

Quick Start

```bash
git clone https://github.com/echosun1996/LF-VAR.git
cd LF-VAR
./main.sh 2  # Download dataset
./main.sh m  # Run LF-VAR
```

Citation

```bibtex
@InProceedings{SunJia_Controllable_MICCAI2025,
  author    = {Sun, Jiajun and Yu, Zhen and Yan, Siyuan and Ong, Jason J. and Ge, Zongyuan and Zhang, Lei},
  title     = {{Controllable Skin Synthesis via Lesion-Focused Vector Autoregression Model}},
  booktitle = {Proceedings of Medical Image Computing and Computer Assisted Intervention -- MICCAI 2025},
  year      = {2025},
  publisher = {Springer Nature Switzerland},
  volume    = {LNCS 15975},
  month     = {September},
  pages     = {128--138}
}
```